- The Product Prism

🤖 📜 AI, Ethics, and Product

Where Are PMs in the Conversation?

Insights

AI is moving faster than most roadmaps can keep up with. But while engineers are building and policy teams are reacting, many PMs are stuck in the middle or, worse, sitting on the sidelines. So where should product leaders show up in the conversation on AI and ethics?

Unlike legal or engineering teams, PMs see the tradeoffs across UX, business, and tech. That makes them uniquely positioned to flag ethical risks early, but only if they step up.

In this issue, I cover:

The PM’s Ethical Minefield

An Ethics Case Study

A practical framework to assess ethical risk

🆕 AI Tool of the Week

Let’s dive in! 🚀

Introduction

Picture this: Your AI feature just boosted conversion by 30%. Champagne pops. Then, a user discovers it generates racist stereotypes when prompted with certain names. Viral outrage ensues. Your product is trending, but for all the wrong reasons.

This isn’t hypothetical. It’s happening daily. And while engineers tweak algorithms and execs fret over PR, product managers are caught in the crossfire.

The Product Manager’s Ethical Minefield.

1. Bias: The Silent Saboteur

What’s Happening:

AI models amplify societal biases (e.g., denying loans to minority groups, or flagging Black defendants as higher risk in criminal-justice risk assessments).

Why PMs Own This:

PMs define use cases. A “job applicant scorer” feature isn’t harmless if it is trained on biased data.

Fix:

Demand training data transparency from vendors.

Build “bias stress tests” into your QA.
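One way to picture a "bias stress test" in QA is a check that compares how often each group gets the favourable outcome. The sketch below is only an illustration: the function names, the sample data, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb used in US employment guidelines) are assumptions, not something prescribed by this issue.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the model gave the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome, so there is no gap
    return min(rates.values()) / highest


def passes_bias_stress_test(records, min_ratio=0.8):
    """Pass only if the lowest rate is at least `min_ratio` of the highest.

    The 0.8 default is an assumed threshold; pick one that fits your domain.
    """
    return disparate_impact_ratio(selection_rates(records)) >= min_ratio


# Hypothetical QA sample: group A approved 8 of 10, group B approved 4 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, ratio, passes_bias_stress_test(sample))
# prints: {'A': 0.8, 'B': 0.4} 0.5 False
```

A failing ratio does not prove the model is unfair, and a passing one does not prove it is fair. Treat it as a tripwire that tells the team where to look next.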

2. Hallucinations: When AI Lies Confidently

What’s Happening:

Chatbots invent facts (e.g., citing fake court cases).

PM Liability:

Users blame your product, not “the algorithm.”

Fix:

Watermark AI outputs (e.g., “Verify this with human sources”).

Build enterprise use cases only on enterprise-verified data, with the necessary guardrails in place.

Track hallucination rate like a critical KPI.
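Tracking hallucination rate as a KPI needs a definition the whole team agrees on. The sketch below assumes one possible definition: the share of reviewed answers that contain at least one unsupported claim. The `ReviewedAnswer` type, the 2% alert threshold, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReviewedAnswer:
    answer_id: str
    has_unsupported_claim: bool  # set by a human reviewer or a fact-checking step


def hallucination_rate(reviewed):
    """Share of reviewed answers that contain at least one unsupported claim."""
    if not reviewed:
        raise ValueError("need at least one reviewed answer")
    flagged = sum(1 for answer in reviewed if answer.has_unsupported_claim)
    return flagged / len(reviewed)


def kpi_status(rate, alert_threshold=0.02):
    """Return 'alert' when the rate exceeds the (assumed) threshold."""
    return "alert" if rate > alert_threshold else "ok"


# Hypothetical weekly sample: 200 reviewed answers, 7 of them flagged.
week = [ReviewedAnswer(f"a{i}", i < 7) for i in range(200)]
rate = hallucination_rate(week)
print(f"{rate:.1%}", kpi_status(rate))
# prints: 3.5% alert
```

The number is only as good as the review sample behind it, so keep the sampling method fixed from week to week before comparing trends.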

3. The Speed vs. Ethics Trap

Pressure:

“Ship fast or lose the market!”

Reality:

Move too slow → irrelevant. Move too fast → disaster (see: Microsoft’s Tay chatbot).

Fix:

Adopt Ethical Sprints (dedicate 10% of AI dev time to red-teaming).

Case Study: Google’s AI Principles in Action

The Backstory:

Project Maven, a Pentagon initiative using AI to analyse drone footage, became a flashpoint for ethical debate within Google and the broader tech industry. In early 2018, news broke that Google was supplying AI technology to the U.S. Department of Defense to help automate the identification of objects in drone surveillance videos. The revelation triggered widespread concern among Google employees, many of whom believed the company should not be involved in military operations or the business of war.

Shortly after, Google published a set of AI Principles to guide its ethical development and deployment of artificial intelligence. In February 2025, Google updated those principles, notably removing its explicit pledges not to use AI for weapons or for surveillance that violates international norms.

The updated principles emphasise:

• Bold innovation

• Responsible development and deployment

• Collaborative process

Key commitments now include:

• Developing and deploying AI where the likely overall benefits substantially outweigh foreseeable risks.

• Ensuring appropriate human oversight, due diligence, and feedback mechanisms to align with user goals, social responsibility, and international law and human rights.

• Investing in safety and security research, technical solutions to address risks, and sharing learnings with the ecosystem.

• Rigorous design, testing, monitoring, and safeguards to mitigate unintended or harmful outcomes and avoid unfair bias.

• Promoting privacy and security, respecting intellectual property rights.

How Can PMs Operationalise This Today?

PMs can put these principles into practice today through:

Red teaming and scenario analysis to anticipate misuse or harm.

Continuous monitoring of deployed models for bias, security, and safety.

Stakeholder engagement with external experts, governments, and civil society to inform responsible AI development.

Internal training and education to ensure teams understand and apply responsible AI practices.

Checklists Over Good Intentions:

Every AI feature must pass a 7-point ethics checklist (e.g., “Does this reduce human autonomy?”).

Escalation Pathways:

PMs can halt launches via an “Ethics Andon Cord” (inspired by Toyota’s factory stop cords).

Tradeoff Transparency:

Publicly document dilemmas (e.g., “We prioritised privacy over personalisation here”).

The Outcome:

Slower AI releases but higher trust.

AI-Ethics Framework: “Wheel of Harm”

The "Wheel of Harm" framework is a practical, visual tool designed to help product teams proactively identify and prioritise ethical risks in AI/ML products. It transforms abstract ethical concerns into actionable categories, making it easier for PMs to spot red flags before shipping features.

Origins & Purpose

Created by: Mozilla Foundation + AI ethics researchers.

Goal: Move beyond vague "don’t be evil" slogans → provide concrete evaluation criteria.

Designed for: Product teams (not ethicists) to use in sprint planning, including at early-stage startups.

How It Works: 6 Spokes of Harm

Imagine a wheel with 6 segments. Each represents a harm category. Rate your AI product or feature (1-5) on each segment:

Physical Safety

"Could this physically hurt someone?"

Example: Autonomous delivery robots malfunctioning near pedestrians.

PM Action: Stress-test edge cases (e.g., icy roads).

Discrimination & Bias

"Does this treat groups unfairly?"

Example: Loan-approval AI rejecting women or minorities disproportionately.

PM Action: Audit training data for representation gaps.

Psychological Harm

"Could this damage mental health?"

Example: Social media algorithms promoting eating disorder content.

PM Action: Add friction (e.g., "Are you sure?" prompts for sensitive actions).

Loss of Agency

"Does this remove human control?"

Example: Workplace surveillance AI auto-penalising "unproductive" workers.

PM Action: Always include human override options.

Privacy Erosion

"Does this expose sensitive data?"

Example: Health chatbots storing unencrypted patient conversations.

PM Action: Anonymise data by default.

Social/Systemic Harm

"Could this widen inequality?"

Example: Job-search AI favouring Ivy League graduates.

PM Action: Partner with sociologists during design.

How to Use It: A 4-Step Process

Assemble Your Team: PM, engineer, designer, legal rep.

Score Each Segment: Rate harm risk per spoke (1=low, 5=high).

Prioritise: Focus on segments scoring ≥4.

Mitigate: Brainstorm fixes before coding.

Application in law enforcement: Facial Recognition Feature

Physical Safety: 2 (Low risk)

Discrimination: 5 (High risk: fails on darker skin tones)

Psychological Harm: 4 (If it misidentifies suspects)

Loss of Agency: 3 (Users can’t easily correct errors)

Privacy: 5 (Risk of biometric data leaks)

Social Harm: 4 (Could enable racial profiling)

→ Outcome: Team shelved the feature until bias and accuracy improved.
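The "Score" and "Prioritise" steps can be sketched in a few lines, using the facial recognition scores above. This is a minimal illustration, not an official implementation of the framework: the `prioritise` function and the input validation are assumptions, and the shortened labels in the example ("Discrimination", "Privacy", "Social Harm") are mapped to the full spoke names.

```python
PRIORITY_THRESHOLD = 4  # the "Prioritise" step: focus on segments scoring >= 4

SPOKES = (
    "Physical Safety",
    "Discrimination & Bias",
    "Psychological Harm",
    "Loss of Agency",
    "Privacy Erosion",
    "Social/Systemic Harm",
)


def prioritise(scores, threshold=PRIORITY_THRESHOLD):
    """Return (spoke, score) pairs at or above the threshold, highest first."""
    missing = [spoke for spoke in SPOKES if spoke not in scores]
    if missing:
        raise ValueError(f"unscored spokes: {missing}")
    for spoke, score in scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{spoke}: score must be between 1 and 5")
    flagged = [(spoke, scores[spoke]) for spoke in SPOKES if scores[spoke] >= threshold]
    # sorted() is stable, so ties keep the wheel's original spoke order.
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Scores from the facial recognition example.
facial_recognition = {
    "Physical Safety": 2,
    "Discrimination & Bias": 5,
    "Psychological Harm": 4,
    "Loss of Agency": 3,
    "Privacy Erosion": 5,
    "Social/Systemic Harm": 4,
}

for spoke, score in prioritise(facial_recognition):
    print(f"{score}  {spoke}")
```

Four of the six spokes clear the threshold here, which is consistent with the team's decision not to ship the feature as it stood.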

Why you should adopt this:

Fits Agile Workflows: Takes 30 minutes in sprint planning.

Converts Ethics to Action: Replaces debates with scores.

Builds Consensus: Engineers/designers see risks visually.

Prevents Disasters: Catches issues like "bias by default."

Example: Wheel of Harm

Feature: AI-Powered Resume Scanner

| Harm Category | Risk (1-5) | Mitigation Plan | Owner |
|---|---|---|---|
| Physical Safety | 1 | N/A | |
| Discrimination & Bias | 5 | Audit training data for gender/race gaps | PM |
| Psychological Harm | 3 | Add "This is AI-suggested" label | Designer |
| Loss of Agency | 4 | Allow manual edits to AI scores | Eng |
| Privacy Erosion | 4 | Anonymize applicant data | Legal |
| Social/Systemic Harm | 5 | Partner with DEI experts | CEO |

Final Thoughts

Ethics isn’t a "checklist"; it’s risk management for humanity. The Wheel of Harm gives PMs the language to say: "We can’t ship this until we fix spoke #2."

Want the full toolkit? I’ll DM you the:

Wheel of Harm PDF

Bias audit checklist

"Ethical Sprints" playbook

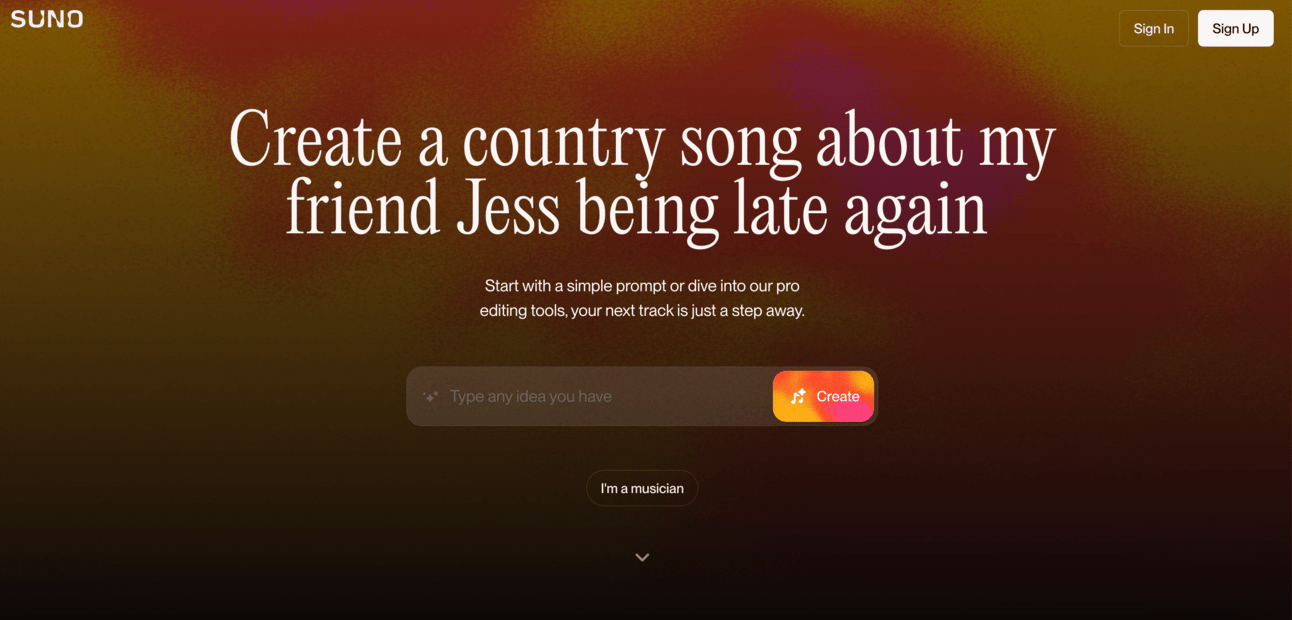

AI Tool of the Week: Suno AI

Link to Try: Suno.ai

What it is: An AI music generator that creates full songs (lyrics, vocals, instruments) from text prompts.

What it does:

Generates 1-2 minute original songs in any genre (pop, lo-fi, metal, etc.).

Creates custom lyrics or uses your own.

Use Cases:

Content creators needing royalty-free background music.

Marketers creating jingles for ads.

Artists brainstorming melodies.

Lovers 😍 looking to create melodies for loved ones.

Cost:

Free tier: 50 credits, renewed daily (about 10 songs).

Pro tier: 2,500 credits, refreshed monthly (up to 500 songs).

If you are wondering about Suno AI output, listen to this tune I created with Suno here

Suno AI

Going for An Interview?

🔗 Try Interview Buddy (v3): https://mock-interview-buddy.streamlit.app/

[It’s free, so all users share a daily usage limit.]

To serve you better and create relevant content, please take a few minutes to complete this Survey. Thank you!